Paper Reports that Amino Acids Used by Life Are Finely Tuned to Explore "Chemistry Space" 4

A recent paper in Nature's journal Scientific Reports, "Extraordinarily Adaptive Properties of the Genetically Encoded Amino Acids," 3 has found that the twenty amino acids used by life are finely tuned to explore "chemistry space" and allow for maximal chemical reactions. Considering that this is a technical paper, they give an uncommonly lucid and concise explanation of what they did:

We drew 108 random sets of 20 amino acids from our library of 1913 structures and compared their coverage of three chemical properties: size, charge, and hydrophobicity, to the standard amino acid alphabet. We measured how often the random sets demonstrated better coverage of chemistry space in one or more, two or more, or all three properties. In doing so, we found that better sets were extremely rare. In fact, when examining all three properties simultaneously, we detected only six sets with better coverage out of the 108 possibilities tested. That's quite striking: out of 100 million different sets of twenty amino acids that they measured, only six are better able to explore "chemistry space" than the twenty amino acids that life uses. That suggests that life's set of amino acids is finely tuned to one part in 16 million. Of course they only looked at three factors -- size, charge, and hydrophobicity. When we consider other properties of amino acids, perhaps our set will turn out to be the best:

While these three dimensions of property space are sufficient to demonstrate the adaptive advantage of the encoded amino acids, they are necessarily reductive and cannot capture all of the structural and energetic information contained in the 'better coverage' sets. They attribute this fine-tuning to natural selection, as their approach is to compare chance and selection as possible explanations of life's set of amino acids: This is consistent with the hypothesis that natural selection influenced the composition of the encoded amino acid alphabet, contributing one more clue to the much deeper and wider debate regarding the roles of chance versus predictability in the evolution of life.

But selection just means it is optimized and not random. They are only comparing two possible models -- selection and chance. They don't consider the fact that intelligent design is another cause that's capable of optimizing features. The question is: Which cause -- natural selection or intelligent design -- optimized this trait?

To do so, you'd have to consider the complexity required to incorporate a new amino acid into life's genetic code. That in turn would require lots of steps: a new codon to encode that amino acid, and new enzymes and RNAs to help process that amino acid during translation. In other words, incorporating a new amino acid into life's genetic code is a multimutation feature.

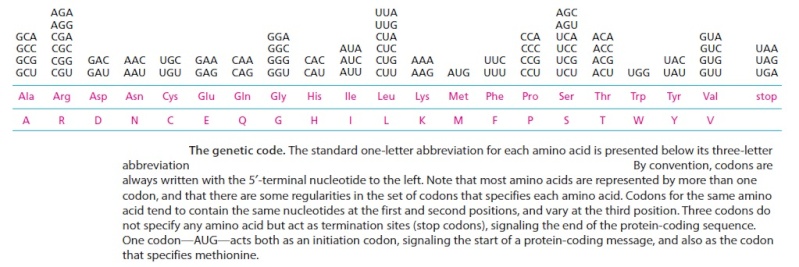

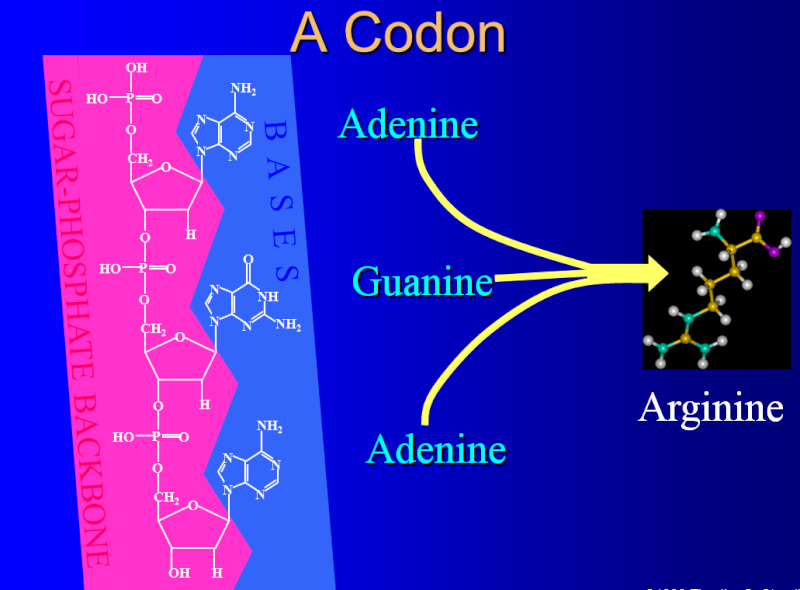

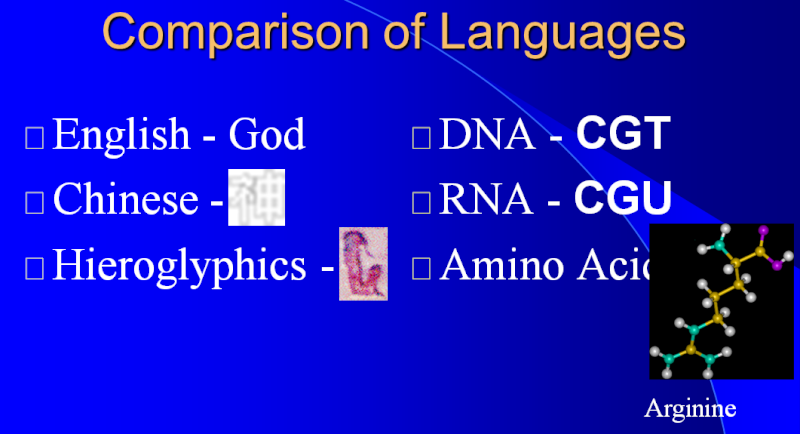

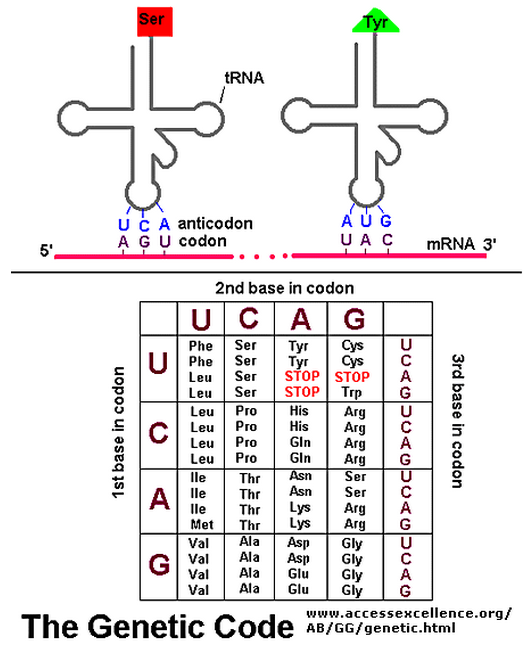

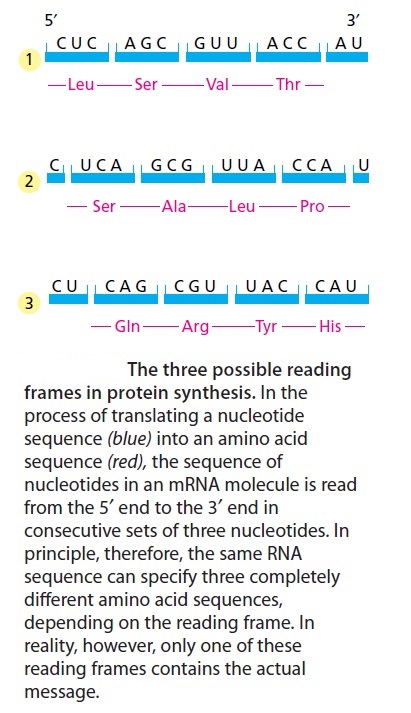

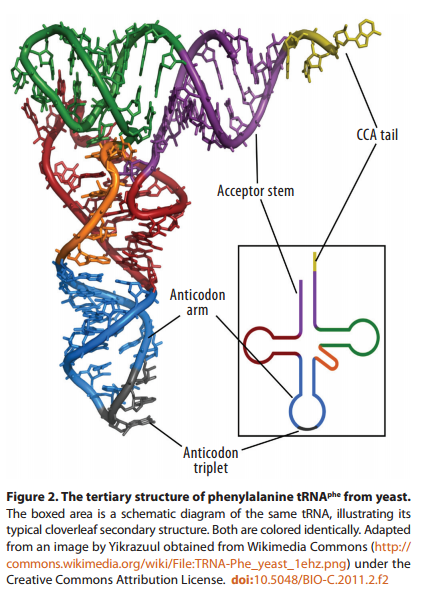

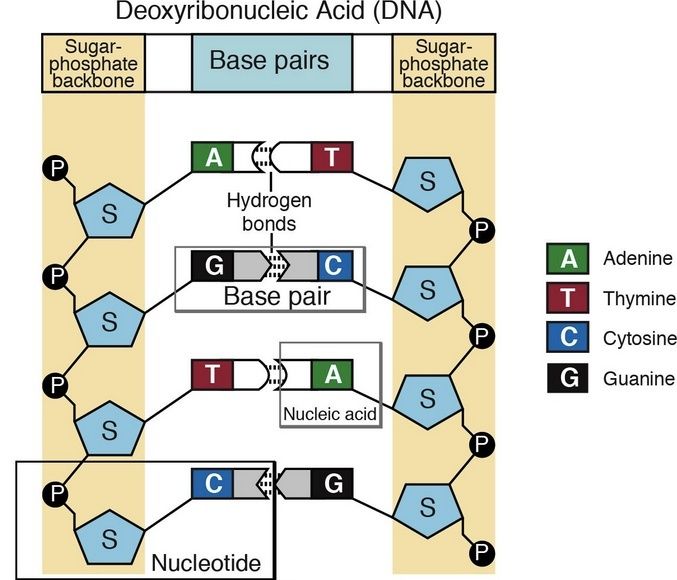

The biochemical language of the genetic code uses short strings of three nucleotides (called codons) to symbolize commands -- including start commands, stop commands, and codons that signify each of the 20 amino acids used in life. After the information in DNA is transcribed into mRNA, a series of codons in the mRNA molecule instructs the ribosome which amino acids are to be strung in which order to build a protein. Translation works by using another type of RNA molecule called transfer RNA (tRNA). During translation, tRNA molecules ferry needed amino acids to the ribosome so the protein chain can be assembled.

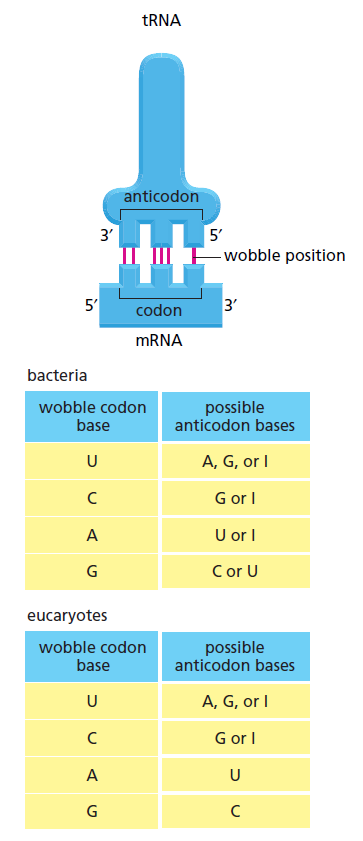

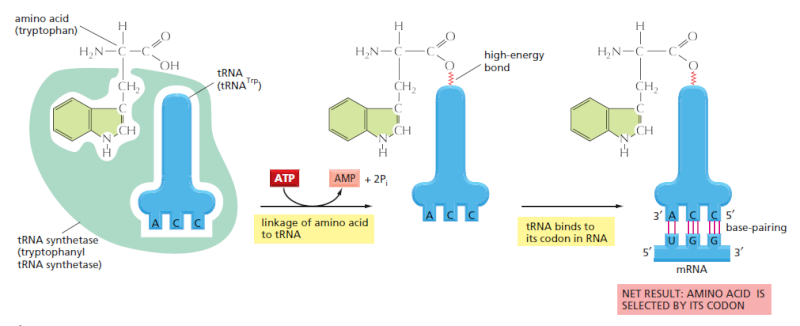

Each tRNA molecule is linked to a single amino acid on one end, and at the other end exposes three nucleotides (called an anti-codon). At the ribosome, small free-floating pieces of tRNA bind to the mRNA. When the anti-codon on a tRNA molecule binds to matching codons on the mRNA molecule at the ribosome, the amino acids are broken off the tRNA and linked up to build a protein.

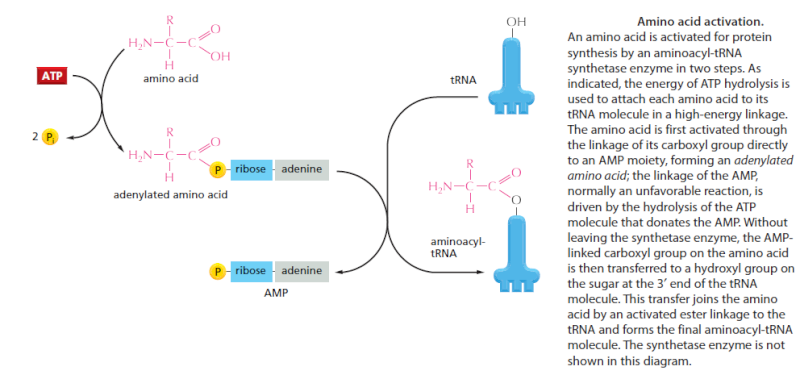

For the genetic code to be translated properly, each tRNA molecule must be attached to the proper amino acid that corresponds to its anticodon as specified by the genetic code. If this critical step does not occur, then the language of the genetic code breaks down, and there is no way to convert the information in DNA into properly ordered proteins. So how do tRNA molecules become attached to the right amino acid?

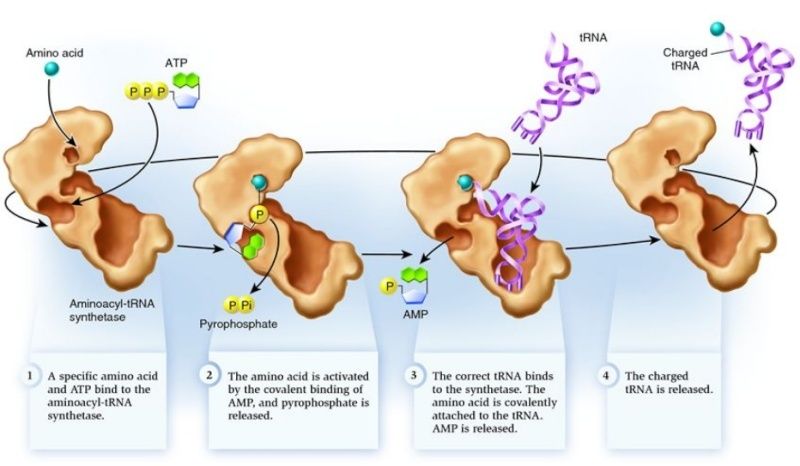

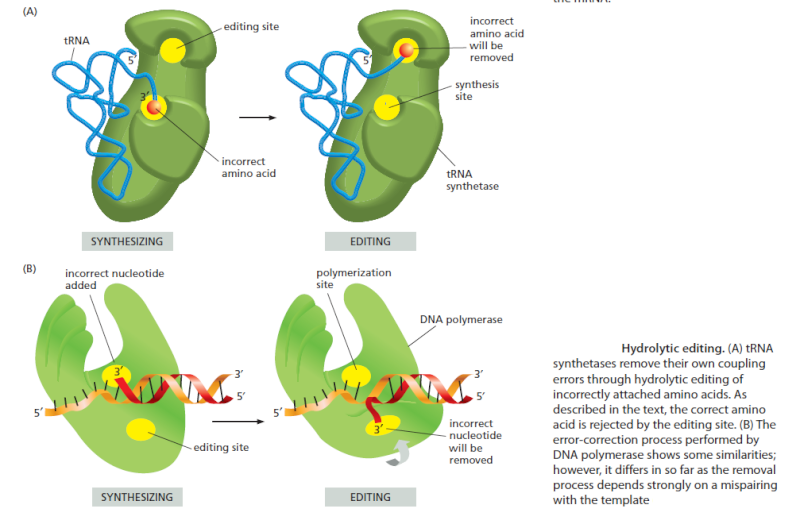

Cells use special proteins called aminoacyl tRNA synthetase (aaRS) enzymes to attach tRNA molecules to the "proper" amino acid under thelanguage of the genetic code. Most cells use 20 different aaRS enzymes, one for each amino acid used in life. These aaRS enzymes are key to ensuring that the genetic code is correctly interpreted in the cell.

Yet these aaRS enzymes themselves are encoded by the genes in the DNA. This forms the essence of a "chicken-egg problem": aaRS enzymes themselves are necessary to perform the very task that constructs them.

How could such an integrated, language-based system arise in a step-by-step fashion? If any component is missing, the genetic information cannot be converted into proteins, and the message is lost. The RNA world is unsatisfactory because it provides no explanation for how the key step of the genetic code -- linking amino acids to the correct tRNA -- could have arisen.

Few of the many possible polypeptide chains wiil be useful to Cells

Bruce Alberts writes in Molecular biology of the cell :

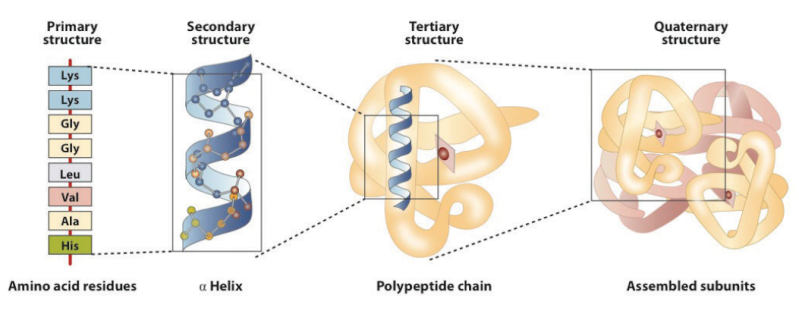

Since each of the 20 amino acids is chemically distinct and each can, in principle, occur at any position in a protein chain, there are 20 x 20 x 20 x 20 = 160,000 different possible polypeptide chains four amino acids long, or 20n different possible polypeptide chains n amino acids long. For a typical protein length of about 300 amino acids, a cell could theoretically make more than 10^390 different pollpeptide chains. This is such an enormous number that to produce just one molecule of each kind would require many more atoms than exist in the universe. Only a very small fraction of this vast set of conceivable polypeptide chains would adopt a single, stable three-dimensional conformation-by some estimates, less than one in a billion. And yet the vast majority of proteins present in cells adopt unique and stable conformations. How is this possible?

The complexity of living organisms is staggering, and it is quite sobering to note that we currently lack even the tiniest hint of what the function might be for more than 10,000 of the proteins that have thus far been identified in the human genome. There are certainly enormous challenges ahead for the next generation of cell biologists, with no shortage of fascinating mysteries to solve.

Now comes Alberts striking explanation of how the right sequence arised :

The answer Iies in natural selection. A protein with an unpredictably variable structure and biochemical activity is unlikely to help the survival of a cell that contains it. Such

proteins would therefore have been eliminated by natural selection through the enormously long trial-and-error process that underlies biological evolution. Because evolution has selected for protein function in living organisms, the amino acid sequence of most present-day proteins is such that a single conformation is extremely stable. In addition, this conformation has its chemical properties finely tuned to enable the protein to perform a particular catalltic or structural function in the cell. Proteins are so precisely built that the change of even a few atoms in one amino acid can sometimes disrupt the structure of the whole molecule so severelv that all function is lost.

Proteins are not rigid lumps of material. They often have precisely engineered moving parts whose mechanical actions are coupled to chemical events. It is this coupling of chemistry and movement that gives proteins the extraordinary capabilities that underlie the dynamic processes in living cells

Now think for a moment . It seems that natural selection ( does that not sound soooo scientific and trustworthy ?! ) is the key answer to any phenomena in biology, where there is no scientific evidence to make a empricial claim. Much has been written about the fact that natural selection cannot produce coded information. Alberts short explanation is a prima facie example about how main stream sciencists make without hesitation " just so " claims without being able to provide a shred of evidence, just in order to mantain a paradigm on which the scientific establishment relies, where evolution is THE answer to almost every biochemical phenomena. Fact is that precision, coded information, stability, interdependence and irreducible complexity etc. are products of intelligent minds. The author seems also to forget that natural selection cannot occur before the first living cell replicates. Several hundred proteins had to be already in place and fully operating in order to make even the simplest life possible

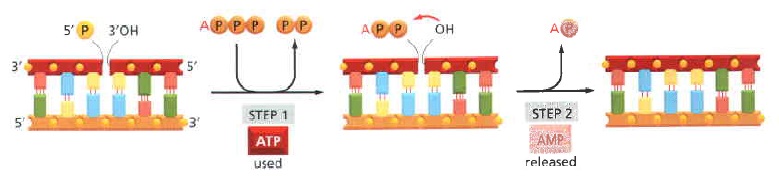

Amino acids link together when the amino group of one amino acid bonds to the carboxyl group of another. Notice that water is a by-product of the reaction (called a condensation reaction).

Stephen Meyer writes in Signature of the cell:

According to neo-Darwinian theory, new genetic information arises first as random mutations occur in the DNA of existing organisms. When mutations arise that confer a survival advantage on the organisms that possess them, the resulting genetic changes are passed on by natural selection to the next generation. As these changes accumulate, the features of a population begin to change over time. Nevertheless, natural selection can "select" only what random mutations first produce. And for the evolutionary process to produce new forms of life, random mutations must first have produced new genetic information for building novel proteins. That, for the

mathematicians, physicists, and engineers at Wistar, was the problem. Why?

The skeptics at Wistar argued that it is extremely difficult to assemble a new gene or protein by chance because of the sheer number of possible base or amino-acid sequences. For every combination of amino acids that produces a functional protein there exists a vast number of other possible combinations that do not. And as the length of the required protein grows, the number of possible amino-acid sequence combinations of that length grows exponentially, so that the odds of finding a functional sequence—that is, a working protein—diminish precipitously.

To see this, consider the following. Whereas there are four ways to combine the letters A and B to make a two-letter combination (AB, BA, AA, and BB), there are eight ways to make three-letter combinations (AAA, AAB, ABB, ABA, BAA, BBA, BAB, BBB), and sixteen ways to make four-letter combinations, and so on. The number of combinations grows geometrically, 22, 23, 24, and so on. And this growth becomes more pronounced when the set of letters is larger. For protein chains, there are 202, or 400, ways to make a two-amino-acid combination, since each position could be any one of 20 different alphabetic characters. Similarly, there are 203, or 8,000, ways to make a three-amino-acid sequence, and 204, or 160,000, ways to make a sequence four amino acids long, and so on. As the number of possible combinations rises, the odds of finding a correct sequence diminishes correspondingly. But most functional proteins are made of hundreds of amino acids. Therefore, even a relatively short protein of, say, 150 amino acids represents one sequence among an astronomically large number of other possible sequence combinations (approximately 10^195).

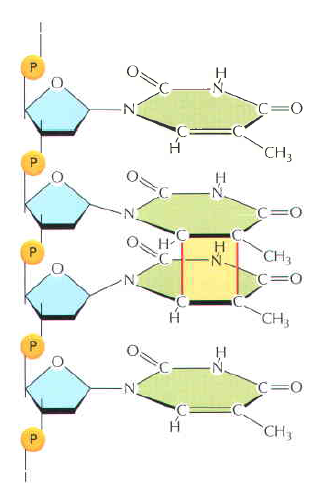

Consider the way this combinatorial problem might play itself out in the case of proteins in a hypothetical prebiotic soup. To construct even one short protein molecule of 150 amino acids by chance within the prebiotic soup there are several combinatorial problems—probabilistic hurdles—to overcome. First, all amino acids must form a chemical bond known as a peptide bond when joining with other amino acids in the protein chain

Consider the way this combinatorial problem might play itself out in the case of proteins in a hypothetical prebiotic soup. To construct even one short protein molecule of 150 amino acids by chance within the prebiotic soup there are several combinatorial problems—probabilistic hurdles—to overcome. First, all amino acids must form a chemical bond known as a peptide bond when joining with other amino acids in the protein chain (see Fig. 9.1). If the amino acids do not link up with one another via a peptide bond, the resulting molecule will not fold into a protein. In nature many other types of chemical bonds are possible between amino acids. In fact, when amino-acid mixtures are allowed to react in a test tube, they form peptide and nonpeptide bonds with roughly equal probability. Thus, with each amino-acid addition, the probability of it forming a peptide bond is roughly 1/2. Once four amino acids have become linked, the likelyhood that they are joined exclusively by peptide bonds is roughly 1/2 × 1/2 × 1/2 ×

1/2 = 1/16, or (1/2)4. The probability of building a chain of 150 amino acids in which all linkages are peptide linkages is (1/2)149, or roughly 1 chance in 10^45.

Second, in nature every amino acid found in proteins (with one exception) has a distinct mirror image of itself; there is one left-handed version, or L-form, and one right-handed version, or D-form. These mirror-image forms are called optical isomers (see Fig. 9.2). Functioning proteins tolerate only left-handed amino acids, yet in abiotic amino-acid production the right-handed and left-handed isomers are produced with roughly equal frequency. Taking this into consideration further compounds the improbability of attaining a biologically functioning protein. The probability of attaining, at random, only L-amino acids in a hypothetical peptide chain 150 amino acids long is (1/2)150, or again roughly 1 chance in 1045. Starting from mixtures of D-forms and L-forms, the probability of building a 150-amino-acid chain at random in which all bonds are peptide bonds and all amino acids are L-form is, therefore, roughly 1 chance in 1090.

Second, in nature every amino acid found in proteins (with one exception) has a distinct mirror image of itself; there is one left-handed version, or L-form, and one right-handed version, or D-form. These mirror-image forms are called optical isomers . Functioning proteins tolerate only left-handed amino acids, yet in abiotic amino-acid production the right-handed and left-handed isomers are produced with roughly equal frequency. Taking this into consideration further compounds the improbability of attaining a biologically functioning protein. The probability of attaining, at random, only L-amino acids in a hypothetical peptide chain 150 amino acids long is (1/2)150, or again roughly 1 chance in 10^45. Starting from mixtures of D-forms and L-forms, the probability of building a 150-amino-acid chain at random in which all bonds are peptide bonds and all amino acids are L-form is, therefore, roughly 1 chance in 10^90.

Functioning proteins have a third independent requirement, the most important of all: their amino acids, like letters in a meaningful sentence, must link up in functionally specified sequential arrangements. In some cases, changing even one amino acid at a given site results in the loss of protein function. Moreover, because there are 20 biologically occurring amino acids, the probability of getting a specific amino acid at a given site is small—1/20. (Actually the probability is even lower because, in nature, there are also many nonprotein-forming amino acids.) On the assumption that each site in a protein chain requires a particular amino acid, the probability of attaining a particular protein 150 amino acids long would be (1/20)150, or roughly 1 chance in 10^195.

How rare, or common, are the functional sequences of amino acids among all the possible sequences of amino acids in a chain of any given length?

Douglas Axe answered this question in 2004 3 , and Axe was able to make a careful estimate of the ratio of (a) the number of 150-amino-acid sequences that can perform that particular function to (b) the whole set of possible amino-acid sequences of this length. Axe estimated this ratio to be 1 to 10^77.

This was a staggering number, and it suggested that a random process would have great difficulty generating a protein with that particular function by chance. But I didn't want to know just the likelihood of finding a protein with a particular function within a space of combinatorial possibilities. I wanted to know the odds of finding any functional protein whatsoever within such a space. That number would make it possible to evaluate chance-based origin-of-life scenarios, to assess the probability that a single protein—any working protein—would have arisen by chance on the early earth.

Fortunately, Axe's work provided this number as well.17 Axe knew that in nature proteins perform many specific functions. He also knew that in order to perform these functions their amino-acid chains must first fold into stable three-dimensional structures. Thus, before he estimated the frequency of sequences performing a specific (beta-lactamase) function, he first performed experiments that enabled him to estimate the frequency of sequences that will produce stable folds. On the basis of his experimental results, he calculated the ratio of (a) the number of 150-amino-acid sequences capable of folding into stable "function-ready" structures to (b) the whole set of possible amino-acid sequences of that length. He determined that ratio to be 1 to 10^74.

In other words, a random process producing amino-acid chains of this length would stumble onto a functional protein only about once in every 10^74 attempts.

When one considers that Robert Sauer was working on a shorter protein of 100 amino acids, Axe's number might seem a bit less prohibitively improbable. Nevertheless, it still represents a startlingly small probability. In conversations with me, Axe has compared the odds of producing a functional protein sequence of modest (150-amino-acid) length at random to the odds of finding a single marked atom out of all the atoms in our galaxy via a blind and undirected search. Believe it or not, the odds of finding the marked atom in our galaxy are markedly better (about a billion times better) than those of finding a functional protein among all the sequences of corresponding length.

1) https://answersingenesis.org/origin-of-life/the-origin-of-life-dna-and-protein/

2) B.Alberts Molecular biology of the cell.

3) http://www.ncbi.nlm.nih.gov/pubmed/15321723

4) http://www.evolutionnews.org/2015/06/paper_reports_t096581.html

http://elshamah.heavenforum.org/t2062-proteins-how-they-provide-striking-evidence-of-design#3552